Qingyu Zhang

Master's Student in Computer Science and Technology

Institute of Software, Chinese Academy of Sciences

Biography

I’m Qingyu Zhang, a first-year master’s student at the Chinese Information Processing Laboratory, Institute of Software, Chinese Academy of Sciences. My research focuses on large language models, particularly their long-context and multi-turn dialogue capabilities.

Interests

- LLM Long Context

- LLM Compression & Efficiency

- LLM Post-training

- LLM Reinforcement Learning

Education

M.S. in Computer Science and Technology, 2024 - Present

Institute of Software, Chinese Academy of Sciences

B.S. in Computer Science and Technology, 2020 - 2024

College of Computer and Data Science, Fuzhou University

Experience

Algorithm Intern

- Led the R&D of an RL-based dialogue optimization system for large language models.

- Deployed the system in a live business environment, increasing the core business conversion rate by 10–20%.

- Research submitted to AAAI 2026.

Foundation Model Intern

- Investigated layer redundancy in Transformers and proposed a layer pruning method (ShortGPT, ACL Findings 2025).

- Studied the lower bound of the RoPE base (Base of RoPE Bounds Context Length, NeurIPS 2024).

- Proposed a variant of the “Needle in a Haystack” evaluation method (Patent Granted).

Research Intern

- Adapted and optimized SFT/DPO algorithms for the Megatron framework (AutoAlign, ACL Demo 2025).

- Implemented large-scale distributed training on Ascend 910B using the ModelLink framework.

Publications

(2025).

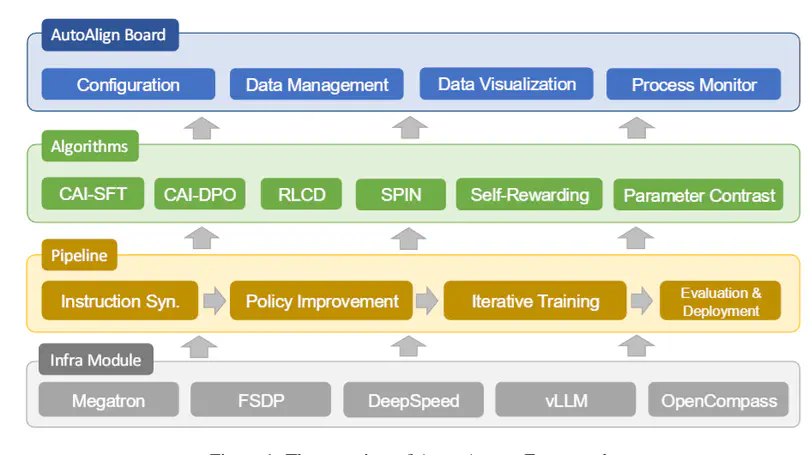

AutoAlign: Automated Alignment for Large Language Models.

In ACL Demo 2025.

- ShortV: Efficient Multimodal Large Language Models by Freezing Visual Tokens in Ineffective Layers. In ICCV 2025.

- ShortGPT: Layers in Large Language Models are More Redundant Than You Expect. In ACL Findings 2025.

- Base of RoPE Bounds Context Length. In NeurIPS 2024.

Projects

A unified pruning toolkit for AI models. It currently includes ShortGPT (layer pruning for LLMs) and ShortV (freezing visual tokens in ineffective layers of multimodal LLMs).

An open-source toolkit for automated alignment of large language models. I was responsible for adapting and optimizing the SFT/DPO algorithms for the Megatron framework.

Awards

- Jun 2024: Recognized as an Outstanding Graduate of Fuzhou University.

- May 2023: Won First Prize in the 10th ASC Student Supercomputer Challenge.

- Nov 2022: Won First Prize in the 13th National College Student Mathematics Competition.

Contact

- ttraveller2001@gmail.com

- No. 4 South Fourth Street, Zhongguancun, Haidian District, Beijing 100190